What is Data Structures?

Data structures are specialized formats for organizing and storing data in a computer so that it can be accessed and modified efficiently. They are essential for managing and manipulating data in programming and software development. Different data structures offer various ways to handle data, each suited to particular types of operations and use cases.

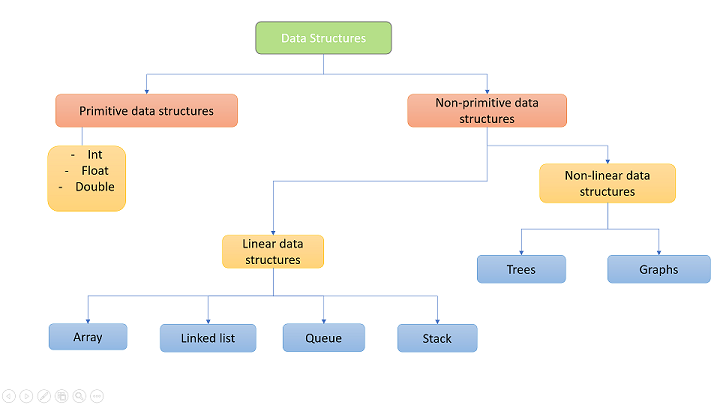

Types of Data Structures

1. Primitive Data Structures

- Integers, Floats, Characters: Basic data types provided by most programming languages, representing single values.

2. Non-Primitive Data Structures

- Linear Data Structures: Organize data in a sequential manner.

- Arrays: A collection of elements, all of the same type, stored in contiguous memory locations. Provides fast access but fixed size.

- Linked Lists: A sequence of elements where each element points to the next one. It allows dynamic sizing and efficient insertions/deletions but has slower access compared to arrays.

- Stacks: A collection of elements with Last In, First Out (LIFO) access. Useful for scenarios like function call management and undo operations.

- Queues: A collection of elements with First In, First Out (FIFO) access. Useful for scheduling and buffering.

- Non-Linear Data Structures: Organize data in a hierarchical or interconnected manner.

- Trees: A hierarchical structure with nodes, where each node has a value and pointers to child nodes. Types include:

- Binary Trees: Each node has at most two children.

- Binary Search Trees (BST): A binary tree where left child nodes have smaller values and right child nodes have larger values.

- Heaps: A complete binary tree used for priority queues, where the root node is either the maximum or minimum.

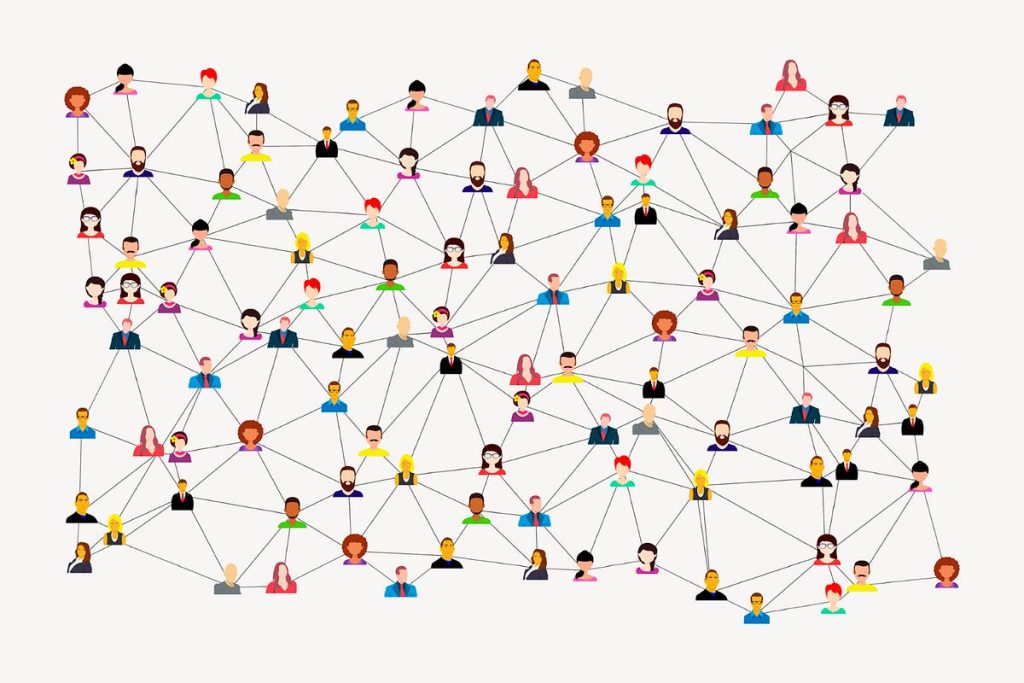

- Graphs: A collection of nodes (vertices) and edges connecting them. Can be used to represent networks, relationships, and paths. Types include:

- Directed Graphs: Edges have a direction.

- Undirected Graphs: Edges have no direction.

- Weighted Graphs: Edges have weights representing costs or distances.

- Hash Tables: A structure that uses hash functions to map keys to values, allowing for fast data retrieval and insertion. Useful for implementing associative arrays, sets, and dictionaries.

- Sets: A collection of unique elements with operations to add, remove, and check membership.

Key Operations on Data Structures

- Insertion: Adding new data.

- Deletion: Removing existing data.

- Traversal: Accessing each element in a specific order.

- Search: Finding a specific element.

- Update: Modifying existing data.

Choosing the Right Data Structure

The choice of data structure depends on the specific requirements of the application:

- Efficiency: Consider time complexity for operations such as insertion, deletion, and access.

- Memory Usage: Analyze the space complexity and memory overhead.

- Ease of Implementation: Choose a structure that simplifies implementation and maintenance.

Examples and Use Cases

- Arrays: Used for storing collections of data that need quick access by index (e.g., a list of student grades).

- Linked Lists: Useful for dynamic collections of data where frequent insertions/deletions are needed (e.g., a playlist of songs).

- Stacks: Ideal for tasks that follow LIFO order, such as function call management and undo functionality in text editors.

- Queues: Suitable for tasks requiring FIFO order, like job scheduling and buffering.

- Trees: Effective for hierarchical data (e.g., file systems) and efficient searching and sorting (e.g., binary search trees).

- Graphs: Used to model networks, such as social networks, transportation systems, and recommendation systems.

- Hash Tables: Great for fast data retrieval with unique keys (e.g., implementing dictionaries and caches).

How Data Structures Works?

Data structures work by organizing and managing data in specific ways to facilitate efficient access, modification, and storage. Here’s a detailed explanation of how different data structures operate:

1. Arrays

How It Works:

- Structure: An array is a collection of elements stored in contiguous memory locations. Each element can be accessed directly via its index.

- Access: Fast access to elements using indices (e.g.,

array[i]). Time complexity for access is O(1). - Insertion/Deletion: Inserting or deleting elements, especially in the middle, requires shifting other elements, which can be inefficient (O(n) time complexity).

Use Cases:

- Storing collections of data where the size is known and elements need to be accessed quickly (e.g., a list of student grades).

2. Linked Lists

How It Works:

- Structure: A linked list consists of nodes, each containing data and a reference (or pointer) to the next node. Types include singly linked lists (one pointer) and doubly linked lists (two pointers: next and previous).

- Access: Sequential access only; to find an element, you must traverse the list from the head node (O(n) time complexity for access).

- Insertion/Deletion: Efficient at inserting or deleting elements (O(1) time complexity) if the position is known, as it only involves updating pointers.

Use Cases:

- Dynamic collections where elements are frequently added or removed (e.g., implementing a queue or stack).

3. Stacks

How It Works:

- Structure: A stack follows the Last In, First Out (LIFO) principle. Elements are added and removed from the top.

- Operations:

- Push: Add an element to the top (O(1) time complexity).

- Pop: Remove the top element (O(1) time complexity).

- Peek: Access the top element without removing it (O(1) time complexity).

Use Cases:

- Managing function calls, undo operations in applications, and parsing expressions.

4. Queues

How It Works:

- Structure: A queue follows the First In, First Out (FIFO) principle. Elements are added at the rear and removed from the front.

- Operations:

- Enqueue: Add an element to the rear (O(1) time complexity).

- Dequeue: Remove an element from the front (O(1) time complexity).

- Peek: Access the front element without removing it (O(1) time complexity).

Use Cases:

- Task scheduling, buffering, and managing resources in operating systems.

Also Read : What is Algorithm?

5. Trees

How It Works:

- Structure: Trees consist of nodes connected by edges. Each node has a value and may have child nodes. Common types include:

- Binary Trees: Each node has at most two children.

- Binary Search Trees (BST): Nodes are arranged such that the left child has a smaller value and the right child has a larger value.

- Heaps: Complete binary trees used for priority queues, where each parent node is either the maximum or minimum.

- Operations:

- Traversal: Visiting each node in a specific order (in-order, pre-order, post-order).

- Search: Finding a node or value (e.g., O(log n) time complexity for BST).

Use Cases:

- Hierarchical data (e.g., file systems), efficient searching and sorting (e.g., BST), and priority management (e.g., heaps).

6. Graphs

How It Works:

- Structure: Graphs consist of nodes (vertices) connected by edges. Can be directed (edges have direction) or undirected (edges have no direction), and weighted (edges have weights) or unweighted.

- Operations:

- Traversal: Visiting nodes using algorithms like Depth-First Search (DFS) or Breadth-First Search (BFS).

- Shortest Path: Finding the shortest path between nodes (e.g., Dijkstra’s Algorithm).

Use Cases:

- Modeling networks (e.g., social networks, transportation systems), route finding, and network flow problems.

7. Hash Tables

How It Works:

- Structure: Hash tables use hash functions to map keys to values in an array. The hash function determines the index where a value is stored.

- Operations:

- Insertion: Add a key-value pair using the hash function (O(1) average time complexity).

- Search: Retrieve a value based on its key (O(1) average time complexity).

- Deletion: Remove a key-value pair (O(1) average time complexity).

Use Cases:

- Implementing associative arrays, dictionaries, and caches.

Conclusion

Data structures work by organizing and managing data in specific ways to facilitate efficient data handling and operations. Each type of data structure is designed to optimize certain operations, such as access, insertion, or deletion, and is chosen based on the needs of the application. Understanding how different data structures work helps in selecting the right one for solving specific problems effectively.

FAQ

1. Why are data structures important?

Data structures are crucial because they enable efficient data management and manipulation. Choosing the right data structure can significantly impact the performance of algorithms and software, affecting access times, memory usage, and overall efficiency.

2. How do arrays work?

Arrays store elements in contiguous memory locations and allow direct access using indices. They provide fast access but have a fixed size, making insertion and deletion operations less efficient compared to other structures.

3. How do linked lists work?

Linked lists consist of nodes where each node contains data and a reference (or pointer) to the next node. They support dynamic sizing and efficient insertions/deletions but require sequential access, which can be slower for searches.

4. What is a stack, and how does it work?

A stack is a linear data structure that follows Last In, First Out (LIFO) order. Elements are added (pushed) and removed (popped) from the top. It is used in scenarios like function call management and undo functionality.

5. What is a queue, and how does it work?

A queue is a linear data structure that follows First In, First Out (FIFO) order. Elements are added (enqueued) at the rear and removed (dequeued) from the front. It is used for task scheduling and buffering.